Designing a Voice-Enabled Agentic Decision Framework for Real-Time User Interaction

In an age where users expect fast, personalized, and intelligent support, traditional voice assistants fall short when they simply transcribe input or rely on static responses. What if we could build a voice-enabled system that actually listens, reasons, adapts, and responds like a human — in real time?

This is where agentic AI comes into play.

In this article, I walk through a production-grade Voice-Enabled Agentic Decision Framework I recently helped design and implement. This architecture handles natural voice input, processes intent intelligently, adapts behavior based on confidence and context, and resolves issues — autonomously or through graceful human handoff.

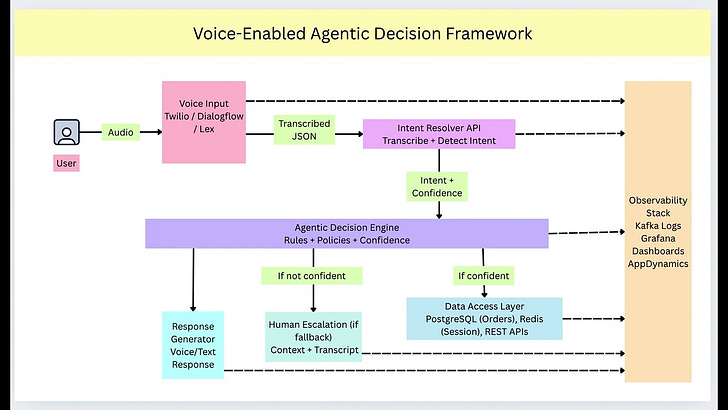

🧱 Overview of the Architecture

The system is built as a modular, reactive pipeline with the following components:

Voice Interface (Input capture)

Intent Resolver API (Speech-to-text + intent detection)

Agentic Decision Engine (Reasoning and control)

Data Access Layer (Databases and APIs)

Human Escalation Path (Fallback with context)

Response Generator (Voice or text output)

Logging & Observability Stack (Monitoring, debugging, feedback loop)

Each component is loosely coupled, highly observable, and designed for latency-sensitive, real-time interactions.

🎧 1. Voice Interface – Capturing Natural Input

At the front line is the voice input system. We used cloud-native voice interfaces like:

Twilio Voice SDK

Google Dialogflow

Amazon Lex

These tools convert spoken input into structured payloads — typically JSON-formatted transcripts. Example:

{ "transcript": "Check my refund status", "language": "en-US" }

This is the entry point to our agentic pipeline.
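Before the transcript reaches the Intent Resolver, it helps to normalize it into one typed shape. The sketch below assumes the two fields from the example above. The `VoiceInput` type and the `parse_voice_payload` helper are hypothetical names used for illustration. Twilio, Dialogflow and Lex each return their own payload schema, so a real adapter would map each vendor's fields into this shape.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class VoiceInput:
    """Normalized transcript handed to the Intent Resolver (hypothetical type)."""
    transcript: str
    language: str


def parse_voice_payload(raw: str, default_language: str = "en-US") -> VoiceInput:
    """Parse a JSON transcript payload and reject empty or malformed input."""
    data = json.loads(raw)  # raises json.JSONDecodeError on malformed JSON
    if not isinstance(data, dict):
        raise ValueError("payload must be a JSON object")
    transcript = str(data.get("transcript", "")).strip()
    if not transcript:
        raise ValueError("payload has no usable transcript")
    language = str(data.get("language") or default_language)
    return VoiceInput(transcript=transcript, language=language)


payload = '{ "transcript": "Check my refund status", "language": "en-US" }'
print(parse_voice_payload(payload))
# → VoiceInput(transcript='Check my refund status', language='en-US')
```

Empty transcripts are rejected at the edge of the system. This means later stages never have to guess what silence or noise meant.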

🧠 2. Intent Resolver – Understanding the User

Next, we pass this input to an Intent Resolver API. This could be:

A Dialogflow agent

A fine-tuned BERT-based model

A hybrid rule-based parser

Its job: extract the intent and confidence score.

Example output:

{ "intent": "check_refund_status", "confidence": 0.92 }

If confidence is above a threshold, we proceed. If not, we prepare for fallback.
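The sketch below shows the rule-based end of the "hybrid rule-based parser" option together with the threshold check. All of the following are assumptions made for illustration:

* the keyword rules;
* the 0.75 threshold;
* scoring "confidence" as the fraction of an intent's patterns that match.

A Dialogflow agent or a fine-tuned BERT model would return its own confidence value in place of this crude score.

```python
import re
from dataclasses import dataclass

# Hypothetical keyword rules: intent name -> regex patterns that signal it.
INTENT_RULES: dict[str, list[str]] = {
    "check_refund_status": [r"\brefund\b", r"\bstatus\b", r"\bcheck\b"],
    "cancel_order": [r"\bcancel\b", r"\border\b"],
}

CONFIDENCE_THRESHOLD = 0.75  # assumed value; tuned from observability data in practice


@dataclass(frozen=True)
class IntentResult:
    intent: str
    confidence: float


def resolve_intent(transcript: str) -> IntentResult:
    """Score each intent by the fraction of its patterns found in the transcript."""
    text = transcript.lower()
    best = IntentResult(intent="unknown", confidence=0.0)
    for intent, patterns in INTENT_RULES.items():
        hits = sum(1 for pattern in patterns if re.search(pattern, text))
        score = hits / len(patterns)
        if score > best.confidence:
            best = IntentResult(intent, score)
    return best


def should_proceed(result: IntentResult, threshold: float = CONFIDENCE_THRESHOLD) -> bool:
    """Proceed automatically only when confidence is above the threshold."""
    return result.confidence > threshold


print(resolve_intent("Check my refund status"))
print(should_proceed(resolve_intent("where is my refund")))
```

"Check my refund status" matches all three refund patterns, so it scores 1.0 and proceeds. "where is my refund" matches only one pattern, so it scores about 0.33 and is routed to fallback.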

🧭 3. Agentic Decision Engine – Reasoning & Control

The Decision Engine is the brain of the system. It receives the structured intent and makes a decision:

Is the confidence high enough?

Do we have the required context?

Can we resolve this without a human?

If every answer is yes, it proceeds to fetch data. If any answer is no, it initiates escalation.

The logic uses:

Rule-based workflows

Confidence scoring thresholds

History/session state (from Redis)

Error recovery logic

This component keeps the user journey autonomous while ensuring it stays safe and reliable.
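The three checks above can be sketched as a small routing function. The following are hypothetical stand-ins:

* The rule tables, thresholds and key names.
* The `session` argument, a plain dict standing in for the Redis-backed session state.
* The bounded "re-prompt, then escalate" route. This is only one assumed form of the error recovery logic, which the article does not detail.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    RESOLVE = "resolve"
    REPROMPT = "reprompt"
    ESCALATE = "escalate"


# Hypothetical rule tables; real ones would come from product-defined workflows.
REQUIRED_CONTEXT: dict[str, list[str]] = {"check_refund_status": ["user_id"]}
AUTOMATABLE: set[str] = {"check_refund_status"}
CONFIDENCE_THRESHOLD = 0.75    # assumed value
MAX_LOW_CONFIDENCE_TURNS = 2   # assumed re-prompt budget before escalating


@dataclass(frozen=True)
class Decision:
    route: Route
    reason: str


def decide(intent: str, confidence: float, session: dict) -> Decision:
    """Apply the engine's three checks in order; `session` stands in for Redis state."""
    # 1. Is the confidence high enough? If not, re-prompt a limited number of times.
    if confidence < CONFIDENCE_THRESHOLD:
        turns = session.get("low_conf_turns", 0) + 1
        session["low_conf_turns"] = turns
        if turns > MAX_LOW_CONFIDENCE_TURNS:
            return Decision(Route.ESCALATE, "repeated low confidence")
        return Decision(Route.REPROMPT, "low confidence; ask the user to rephrase")
    session["low_conf_turns"] = 0  # a confident turn resets the recovery counter

    # 2. Do we have the required context?
    missing = [key for key in REQUIRED_CONTEXT.get(intent, []) if key not in session]
    if missing:
        return Decision(Route.ESCALATE, "missing context: " + ", ".join(missing))

    # 3. Can we resolve this without a human?
    if intent not in AUTOMATABLE:
        return Decision(Route.ESCALATE, "intent requires a human")
    return Decision(Route.RESOLVE, "all checks passed")


print(decide("check_refund_status", 0.92, {"user_id": "u-123"}).route)
```

Counting low-confidence turns in session state is what prevents a confused agent from looping forever. After the budget is spent, the user is escalated rather than asked to repeat themselves again.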

🗂 4. Data Access Layer – Getting What We Need

If the agent can resolve the request, it queries the necessary data:

PostgreSQL for transactional records

Redis for current session context

External REST APIs for third-party systems

Example: When a user asks for a refund status, the engine pulls recent transaction records using the user ID. All queries are logged and sanitized for security and traceability.
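The article does not show its queries. This sketch therefore uses Python's built-in `sqlite3` as an in-memory stand-in for PostgreSQL. The `refunds` table, its columns, the sample rows and `fetch_recent_refunds` are all hypothetical. The pattern is what matters: bound parameters rather than string formatting, plus one log line per query. With a PostgreSQL driver such as psycopg, the placeholder is `%s` instead of `?`.

```python
import logging
import sqlite3

logger = logging.getLogger("data_access")


def fetch_recent_refunds(conn: sqlite3.Connection, user_id: str, limit: int = 5) -> list[tuple]:
    """Return the user's most recent refund records via a parameterized query."""
    # Placeholders keep user input out of the SQL text entirely.
    query = (
        "SELECT order_id, status, amount_cents, updated_at "
        "FROM refunds WHERE user_id = ? ORDER BY updated_at DESC LIMIT ?"
    )
    rows = conn.execute(query, (user_id, limit)).fetchall()
    logger.info("fetch_recent_refunds user_id=%s rows=%d", user_id, len(rows))
    return rows


# Demo data in an in-memory SQLite table standing in for PostgreSQL.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE refunds (user_id TEXT, order_id TEXT, status TEXT, "
    "amount_cents INTEGER, updated_at TEXT)"
)
conn.executemany(
    "INSERT INTO refunds VALUES (?, ?, ?, ?, ?)",
    [
        ("u-123", "A100", "processed", 2599, "2024-05-02"),
        ("u-123", "A099", "pending", 1200, "2024-04-20"),
        ("u-999", "B001", "processed", 500, "2024-05-01"),
    ],
)
print(fetch_recent_refunds(conn, "u-123"))
# → [('A100', 'processed', 2599, '2024-05-02'), ('A099', 'pending', 1200, '2024-04-20')]
```

An input such as `x' OR '1'='1` is compared literally against `user_id`, so it matches nothing instead of rewriting the query.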

🚨 5. Human Escalation – Graceful Fallback

If confidence is low or the issue is too complex, we escalate. Unlike legacy IVR systems, though, the handoff does not force the user to start from scratch.

We pass:

Full transcript

Detected intent + score

Session context

User metadata

This gives human agents a head start. Often, users don’t even realize they’ve been handed off — the experience remains seamless.
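The handoff can be modeled as a single serializable record carrying the four items listed above. The sketch below assumes a JSON handoff. The field names and `build_handoff` are hypothetical; the article does not specify its schema or how the payload reaches the agent's tooling.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class HandoffContext:
    """Context passed to a human agent on escalation (hypothetical schema)."""
    transcript: list[str]                        # full conversation so far, in order
    intent: str                                  # detected intent
    confidence: float                            # intent confidence score
    reason: str                                  # why the engine escalated
    session: dict = field(default_factory=dict)  # session context (e.g. from Redis)
    user: dict = field(default_factory=dict)     # user metadata


def build_handoff(transcript: list[str], intent: str, confidence: float,
                  reason: str, session: dict, user: dict) -> str:
    """Serialize the handoff so the agent's tooling can render it immediately."""
    ctx = HandoffContext(
        transcript=list(transcript),
        intent=intent,
        confidence=round(confidence, 2),
        reason=reason,
        session=dict(session),
        user=dict(user),
    )
    return json.dumps(asdict(ctx), sort_keys=True)


print(build_handoff(
    ["Check my refund status", "The one from last week"],
    "check_refund_status", 0.41, "repeated low confidence",
    {"user_id": "u-123"}, {"preferred_language": "en-US"},
))
```

Including the escalation `reason` tells the human agent why the automation stopped. Without it, they would have to infer the reason from the transcript.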

🔊 6. Response Generator – Delivering the Outcome

Once the resolution path is complete, the system uses:

Text-to-speech (TTS)

SMS/email APIs

App push notifications

to deliver the final response:

“I’ve found your order — the refund has been processed and should arrive in 3–5 business days.”

This response is generated dynamically, tailored to the user, and context-aware.
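One way to make the same result channel-aware is to render a single message body and then adapt it per channel. This is a sketch under stated assumptions:

* The `RefundInfo` record, the channel names `voice`, `sms` and `push`, and the 100-character push limit are hypothetical.
* The actual TTS, SMS or push delivery call is omitted, since it depends on the provider.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RefundInfo:
    order_id: str
    status: str
    eta_business_days: tuple[int, int]  # (earliest, latest)


def render_refund_message(info: RefundInfo, channel: str) -> str:
    """Build a user-facing message tailored to the delivery channel."""
    low, high = info.eta_business_days
    if info.status == "processed":
        body = f"the refund has been processed and should arrive in {low} to {high} business days."
    else:
        body = f"the refund is {info.status}; we'll notify you when it is processed."
    sentence = body[0].upper() + body[1:]
    if channel == "voice":
        # Spoken output: conversational, no identifiers that are awkward to read aloud.
        return f"I've found your order. {sentence}"
    if channel == "sms":
        return f"Order {info.order_id}: {sentence}"
    if channel == "push":
        text = f"Refund update for {info.order_id}: {body}"
        return text if len(text) <= 100 else text[:97] + "..."
    raise ValueError(f"unsupported channel: {channel}")


info = RefundInfo("A100", "processed", (3, 5))
print(render_refund_message(info, "voice"))
print(render_refund_message(info, "sms"))
```

The voice variant writes "3 to 5" rather than a dash range because TTS engines read the words aloud predictably.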

📊 7. Logging & Observability – Closing the Loop

Every step in the flow is logged in real time, with Kafka carrying pipeline events and Logback and the ELK stack handling logs and monitoring.

This observability stack helps identify:

Drop-offs

Latency spikes

Agent confusion (e.g., low confidence loops)

Fallback frequency

We used these insights to retrain models, improve fallback logic, and fine-tune threshold values.
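Here is a minimal sketch of how structured step events could be turned into the signals listed above. Each step is emitted as one JSON object per line, a format Logstash can ingest with its `json` codec. A batch of events is then summarized. The following are assumptions for illustration, not the production pipeline's definitions:

* the event field names;
* the 800 ms latency budget;
* treating two or more low-confidence turns as a "loop".

```python
import json
import logging
import time

logger = logging.getLogger("pipeline")


def log_step(session_id: str, step: str, **fields) -> dict:
    """Emit one structured event per pipeline step as a single JSON line."""
    event = {"ts": time.time(), "session_id": session_id, "step": step, **fields}
    logger.info(json.dumps(event))
    return event


def summarize(events: list[dict], low_conf: float = 0.75, latency_budget_ms: int = 800) -> dict:
    """Compute fallback, drop-off, low-confidence-loop and latency signals for a batch."""
    sessions = {e["session_id"] for e in events}
    escalated = {e["session_id"] for e in events if e["step"] == "escalate"}
    responded = {e["session_id"] for e in events if e["step"] == "respond"}

    # Count low-confidence intent turns per session; two or more counts as a loop.
    low_turns: dict[str, int] = {}
    for e in events:
        if e["step"] == "intent" and e.get("confidence", 1.0) < low_conf:
            low_turns[e["session_id"]] = low_turns.get(e["session_id"], 0) + 1

    return {
        "fallback_rate": len(escalated) / len(sessions) if sessions else 0.0,
        # Sessions that neither received a response nor reached a human.
        "drop_offs": len(sessions - escalated - responded),
        "low_conf_loops": sum(1 for n in low_turns.values() if n >= 2),
        "slow_steps": sum(1 for e in events if e.get("latency_ms", 0) > latency_budget_ms),
    }
```

Summaries like these are what make threshold tuning concrete. For example, a rising `low_conf_loops` count for one intent suggests that its training data or its rules need attention.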

🚀 Innovation & Impact

✅ What Makes This Agentic?

Unlike static bots, this system:

Thinks: Uses rules + context + confidence

Adapts: Changes path based on signal strength

Learns: Observability closes the loop

Balances: Automates while gracefully escalating

⚡ Real-World Outcomes

⏱ Reduced average voice agent resolution time by 35%

📉 Reduced human escalation rate by 20%

🌟 Improved user satisfaction scores in surveys

🔄 Enabled rapid experimentation using logs + feedback loop

👩‍💻 My Role

As the Senior Software Engineer, I led:

Design and development of the decision engine (Python + Django)

Kafka stream processing for event tracking

Redis-based session caching for low-latency lookup

Logging and monitoring pipeline with Logback and the ELK stack (Elasticsearch, Logstash, Kibana)

Integration with REST APIs and front-end triggers

I also collaborated cross-functionally with:

Product teams (to define agent behavior)

Design teams (for escalation experience)

Data teams (to validate KPIs and feedback metrics)

🧩 Final Thoughts

Building intelligent, voice-enabled, real-time systems isn’t just about connecting APIs — it’s about understanding user intent, balancing autonomy with safety, and using feedback to make every interaction better.